I’ve written about how AI is great and AI research will change the world and you should use it to plan your trips, while cheerleaders kick up their heels and wave their pom poms, rah rah sis boom bah.

That’s part of the story. I don’t apologize a bit for being a cheerleader for AI. It’s a great big beautiful tomorrow, if you squint and only look in certain directions.

But now it’s time to talk about the dark side of AI and how it’s enshittifying our world, because that’s part of the story too.

Enshittification was the Word of the Year for 2023, according to the American Dialect Society.

It’s a great term because you immediately know what it means even if you’ve never heard it before. Cory Doctorow coined the term to describe why internet platforms are degrading so quickly and thoroughly. His insight explains so much of what is happening to make the internet - and our world - worse. The process of enshittification:

Stage 1: Platforms are good to their users. Think back on your early experiences with Amazon or Facebook or Google Search.

Stage 2: Platforms abuse their users to make things better for their business customers.

Stage 3: Platforms abuse those business customers to claw back all the value for themselves and their shareholders.

(Doctorow’s essay for the Financial Times expanding and elaborating on the causes and consequences of enshittification is a marvel. It’s a long read but worth the time.)

Devices

The Great Enshittening is not just about online platforms. Doctorow wrote the story “Unauthorized Bread” in the collection Radicalized about a toaster that doesn’t work unless you use a particular brand of bread. Boy, that’s a silly concept, huh?

Last week Synology announced that its consumer storage devices will not work with anything other than Synology-branded hard drives, a change in policy that has no benefit for consumers - zero, none, nada, Synology doesn’t even pretend otherwise. Garmin is putting more features that used to be free in a “premium” tier behind a $7/month paywall. The CEO claims people love it. Nespresso uses patents and bar codes to make sure that most third-party pods are not compatible with my coffee maker. Some HP printers have hardware blocks so they will not print with third-party ink cartridges. Every day there are more devices that require subscriptions and more restrictions on how we can use things that we own.

AI Slop

But let’s focus on AI. The enshittification of everything is accelerating as low-quality AI-generated slop floods online platforms and crowds out genuine humans.

One of the things that the new AI tools do is generate a huge amount of content very quickly and cheaply.

Unpleasant human beings are using AI tools to create slop.

AI is being used to create tens of thousands of websites stuffed with articles about nothing; to manipulate streaming royalties on music platforms like Spotify; to generate fake social media engagement through "click farms"; and to create deceptive content farms that plagiarize legitimate news outlets.

What’s the point, you ask? And then you answer: money, because it’s always money. Mostly the AI slop is created to manipulate search engines or social media algorithms to earn money from advertising. AI slop sites rely heavily on "programmatic advertising," where ads are placed automatically. As a result, ads from prominent companies are appearing on these low-quality sites, meaning blue-chip brands are unknowingly helping to fund the proliferation of AI slop.

The scale of AI slop is increasing rapidly - faster than search engines and content moderation algorithms can be updated to deal with it. Every day you’re more likely to be exposed to it when you do a search or scroll Facebook or shop on Amazon or do more or less anything to live life online in 2025.

“Experts estimate that as much as 90 percent of online content may be synthetically generated by 2026” — Europol

Words

Would you notice if you clicked on a link to BBC Sportss? It was (and perhaps still is) an AI generated knock-off. (You see the double “S” at the end, right?) Wired reported recently:

“DoubleVerify, a software platform tracking online ads and media analytics, recently conducted an analysis of a collection of over 200 websites filled with a mixture of seemingly AI-generated content and snippets of news articles cribbed from actual media outlets. According to the analysis, these sites often chose their domain names and designed their websites to mimic those operated by established media brands, including ESPN, NBC, Fox, CBS, and the BBC. Many of these ersatz sites look like legitimate sports news offerings.”

It's been estimated that more than half of longer English-language posts on LinkedIn are AI-generated.

AI can easily scrape and rewrite content from existing sources, often legitimate ones - turbocharged plagiarism. Whole websites are created and filled with AI-generated or rewritten articles. AI is used to create fake author profiles or AI-generated images of people to serve as profile pictures on websites or social accounts.

Kate Knibbs reported for Wired last year about how beloved indie blog "The Hairpin," which ceased publication in 2018, was resurrected in 2024 and stuffed with slapdash AI-generated articles designed to attract search engine traffic. The authors' original bylines were replaced by generic male names of people who don't appear to exist.

There is a surge of AI-generated recipes and food images on Facebook, Instagram, Pinterest, even in cookbooks on Amazon. They often have bizarre ingredient combinations and nonsensical instructions - and they’re polluting your recipe search results.

AI-generated fake books are overwhelming Amazon. The content is low quality (or no quality at all), with misleading covers and fake author biographies. Fake AI-generated reviews are appearing faster than Amazon can police them. Last year the Authors Guild reported a surge of low-quality sham books - among other things, for every anticipated high-profile book, multiple AI-generated scam books would appear shortly after, attempting to steal sales.

Videos

AI-generated videos containing fake stories about public figures have racked up millions of views and receive comments from real people who seem to believe the stories are true. Mother Jones reported last month: “In the wake of Trump’s second election, essentially-anonymous channels have churned out videos telling heartwarming fake stories about players in Trump’s world. Several of the channels have ads in their videos, meaning that the videos are being monetized. . . . Many of the accounts produce multiple videos a day. Stories about cross necklaces, for instance, are especially popular: a video about a fictitious judge demanding that Attorney General Pam Bondi remove a cross necklace has generated 2.8 million views so far.”

Scammers are using AI to mimic the voices and appearances of family members or business executives to trick victims into sending money, divulging sensitive information, and authorizing fraudulent wire transfers.

AI-generated videos are used for misinformation and propaganda, harassment and cyberbullying, and the always popular deepfake pornography.

Music

In September 2024, a North Carolina man was charged with using AI to generate hundreds of thousands of songs and fraudulently obtain millions in royalty payments. Automated tools would create “songs” and assign random names to mimic real artists, then post them to Spotify, Apple Music and YouTube Music, and use bots to artificially stream them.

Up to 10% of all music streamed globally is estimated to be fake, driven by artificial streaming operations. Many Spotify users have reported a noticeable increase in low-quality, generic-sounding tracks appearing in their personalized playlists like "Discover Weekly" and "Release Radar." AI-generated “artists” are proliferating on Spotify with generic or nonsensical names and track titles, significant numbers of “listeners” but no online presence, and no discernible human involvement. Reports have surfaced of AI music apps generating millions of tracks, a tiny fraction of which were removed by Spotify for suspected stream manipulation.

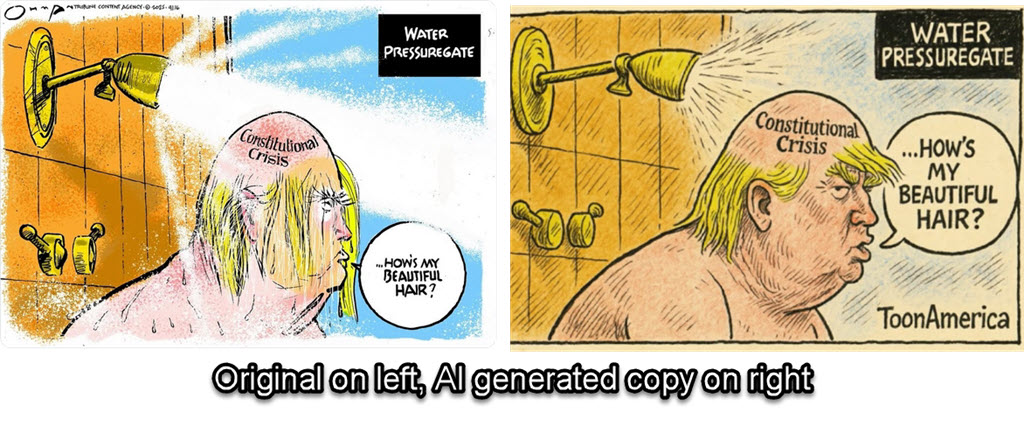

Cartoons

Reported in the Garbage Day newsletter on May 14:

“Political cartoonist Pedro X. Molina discovered that his work, as well as a bunch of other political cartoons, have been victims of generative AI in a way I have to describe as “impressively shameless.” As reported by The Daily Cartoonist, Molina found multiple YouTube accounts had taken these political cartoons and instructed AI image models to reproduce them just differently enough to avoid automatic detection, then added a fake signature and uploaded the knockoffs in batches to YouTube. . . . After digging deeper, I found what seems to be a coordinated network of similar accounts across YouTube and TikTok.”

And there’s more. Blame AI every time you click a link and hit a site loaded with ads where the article doesn’t match the clickbait headline. Whenever your time is wasted by weird AI-generated images. When you talk to an older woman who was targeted because her demographic is more likely to share fake AI-generated images of faux houseplants. When you think you’re going to a reputable site and it takes a while to realize it’s a bogus look-alike. When you play a Spotify track and it’s silent, or the title is gibberish, or the artist obviously isn’t who it’s claimed to be.

When we go online in 2025, we’re wading in a swamp of AI slop.

It’s toxic.

Rah rah sis boom. Bah, humbug.

In his (great) book Anathem, Neal Stephenson describes this as “Artificial Inanity”:

“Well, bad crap would be an unformatted document consisting of random letters. Good crap would be a beautifully typeset, well-written document that contained a hundred correct, verifiable sentences and one that was subtly false. It’s a lot harder to generate good crap. At first they had to hire humans to churn it out. They mostly did it by taking legitimate documents and inserting errors - swapping one name for another, say. But it didn’t really take off until the military got interested.”

https://news.ycombinator.com/item?id=34611001

I like that! And in our world, we managed to get to that result without even involving humans. Progress!