Eli Pariser gave a fascinating talk at TED this year with some new information about online searches. It’s well worth nine minutes of your time. Here’s the link to the video if it doesn’t appear above.

Google, Microsoft, Yahoo, Facebook – all of them are working very hard to show you things that are precisely designed for you. What you don’t know is just how far that’s progressed. It’s already gotten to the point that a page of Google search results for you might be completely different from the results for someone else who searches for exactly the same thing at the same time.

From Pariser’s talk:

> One engineer told me there are 57 signals that Google looks at – everything from what kind of computer you’re on, to what kind of browser you’re using, to where you’re located – that it uses to personally tailor your query results.
>
> Think about it for a second. There is no standard Google any more.

It’s hard to see what that means, so Pariser asked friends to Google “Egypt” and send screenshots. Here is a shot of Google results for “Egypt” at about the same time in February for two different people.

There are some obvious differences in page layout but the details are even more striking. In February, when Egypt was collapsing, Scott (on the left) got search results about the crisis, the protests, and the attack on Lara Logan. But Daniel (on the right) did not get any links about current political events on the first page of his Google results. He got links about travel and vacations, and links to general news sources. Something in Google’s algorithms caused it to present completely different information to those two people, based on something it knew (or thought it knew) about each. Amazing!

I see it happen all the time when I do a search for a topic that I’ve written about on the Bruceb News page. My articles will be on the first page of search results on Google! I was very proud of that until I realized that I was the only person seeing the results that way. Do a search for “Samsung Series 9.” I see two of my articles on the first page of Google results. You might too, if you’re connected to me through Facebook or the Bruceb News mailing list (which is run by Google Groups). I won’t turn up as one of the top 20 authorities on the new laptop for anyone else.

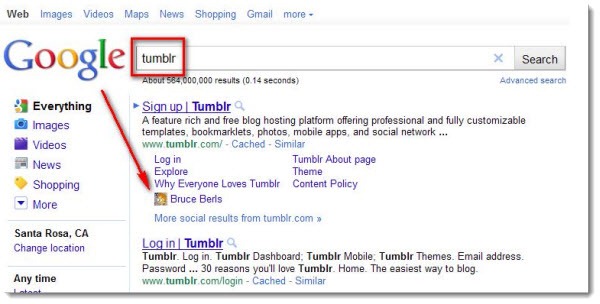

Another example: a client emailed me to let me know that my Tumblr page was showing up on his computer when he did a Google search for “Tumblr.” Again, you won’t see that on your computer unless you’re in some social network with me that Google is aware of.

This will become more striking as we tie ourselves ever more closely to intertwined social networks. Our experience online will be tied to our past behavior – the things we clicked on, how long we stayed on one result before switching to another – and pages will be affected by what our social network “friends” are doing online. It won’t just be search results. Facebook already heavily filters your stream based on your past behavior: how far you scroll down and what you click or don’t click. News sites – Yahoo News, New York Times, Washington Post, more – are looking deeply at how to personalize what you see to make it more likely that it will hold your interest.

They’re giving you what you want. What could be wrong with that?

Pariser warns that we are at risk of losing the guidance of an adult who will sometimes show us what we need to know, in addition to what we want to know. It is already all too easy to read only things that reinforce our beliefs and prejudices and stereotypes and misinformation. The personalization of the web creates a “filter bubble” that leaves out information, and does it invisibly so that we literally don’t know what we’re missing. There is a risk that we will wind up living on a diet of information junk food instead of something more balanced. Human editors of newspapers and magazines also filtered our information but at best they would have a sense of “embedded ethics” that would result in us reading items that were sometimes uncomfortable or challenging or came from different perspectives than our own. The search algorithms running the web may not feel the need to do that, and we might be poorer, not richer, as a result.
