AI & Society

A series of articles about the most significant technology shift of our lifetime

5 Governance Alternatives

The US federal government has been sidelined by political divisions for 25 years. There will not be any meaningful federal laws or policies to regulate artificial intelligence.

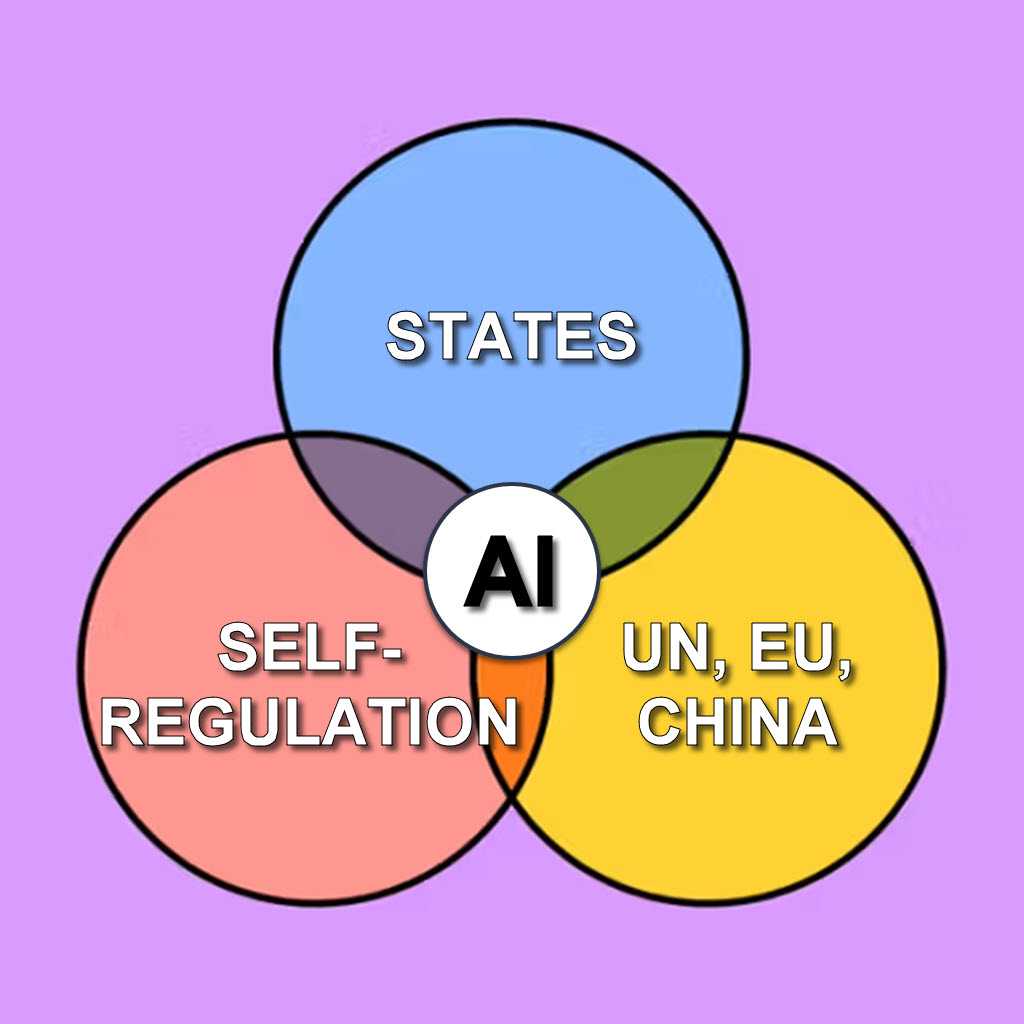

It’s not quite the wild west for tech companies. There are complex and often contradictory alternative sources of governance.

States are acting as regulators of first resort. Most state regulations are focused on specific AI uses that fall within their traditional authority, such as policing, education, and public benefits, but efforts are ongoing to impose broader consumer protections.

The tech companies pinky swear they will behave nicely, promoting voluntary safety frameworks and internal ethics boards as a substitute for binding legislation.

Meanwhile, international bodies are stepping in to create global standards, with the European Union’s rights-focused AI Act, China’s state-centric regulations, and the United Nations’ new initiatives all vying to set the global rules of the road.

Did I mention complex and contradictory?

I can give you a snapshot of the different levels of governance, but bear in mind that the landscape is changing constantly. Each level has a different scope and carries different weight.

The states and technology federalism

On September 29, 2025, California Gov. Gavin Newsom signed the Transparency in Frontier Artificial Intelligence Act (S.B. 53). It requires the most advanced AI companies to disclose the safety protocols they use in building their technologies and to report the greatest risks those technologies pose. The law also strengthens whistleblower protections for employees who warn the public about potential dangers the technology poses.

The law is a diluted version of a more rigorous safety bill (S.B. 1047) that Newsom vetoed last year after a fierce industry lobbying campaign against it.

It’s only a start, and a modest one. There are so many more things that can and should be regulated about AI development. But the new law, and the struggle that led to it being watered down – that’s the political system working as it should, with messy compromises but some progress at the end of the day.

As the hub of the global AI industry, California has emerged as a primary battleground for state-level regulation. But it’s not just California. Other states are working on their own laws. In the best case, a state-level rule can effectively establish a standard that influences production decisions worldwide. California in particular is a major economic force, and companies frequently adjust their products in other markets to meet California’s legal requirements.

Here are some of the areas where states have passed laws affecting AI to help fill the hole left by federal paralysis.

Consequential Decisions: Six states now require human oversight from a physician or other qualified healthcare professional before an AI system can deny a health insurance claim, with nearly two dozen more states introducing similar bills. Other states, such as Illinois and Nevada, are banning or regulating AI for mental health services without licensed clinician oversight. Colorado passed an employment law that requires companies to provide consumer disclosures when using AI for hiring, firing, and promotions.

Public Safety and Law Enforcement: Washington and Colorado require an accountability report, data management, and a warrant or court order for the use of facial recognition technology. Other states have imposed temporary or permanent bans on the technology in specific contexts, such as on body cameras in Oregon and California, or limited its use to serious crimes in Maine and Vermont. If signed, California S.B. 524 will set rules for police using generative AI to write their narrative police reports.

Education: States are forming task forces to address concerns about academic integrity, bias, and data privacy in schools. Policies focus on building AI literacy for educators and students, with Massachusetts establishing an AI Strategic Task Force to produce recommendations and provide resources for its schools.

Having the states step up seems like a good idea to me, what with the whole “try to avoid the extinction of humanity” thing and all.

Not everyone agrees.

Earlier this year the Trump administration tried to shut down state AI regulation so there would be no constraints on the tech companies whatsoever.

In May 2025 Republicans added an amendment to the budget reconciliation bill that would have prohibited states and local governments from passing or enforcing “any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems.”

The major tech companies lobbied for the blanket ban; civil rights groups and consumer advocates opposed it. At the last minute, the Senate stripped the ban from the budget bill, leaving the states free to act.

For now. “Members of Congress are writing fresh proposals. The administration’s AI Action Plan endorsed a more limited version of the concept. And big tech companies will continue to push for freedom from state-level regulation.”

Voluntary compliance and industry regulation

The giant tech companies are motivated by profits. The only reason they consider ethics and the public interest is to protect their profits. They use their money, their informational advantages, and their technical capabilities to influence policy discussions and regulatory frameworks for their own benefit.

Some of you are wincing and complaining that I’m cynical. Gee, you think? Go ahead, convince me otherwise. Quote me the lip service they pay to transparency, accountability, and ethical data use. I have some lip service of my own: a few choice words about naivete and late-stage capitalism and greed, and then my lips pursed while I blow a razzberry. Brrrrrr.

But the big companies do have market incentives like public trust, brand reputation, and the avoidance of consumer backlash and legal risks. Voluntary controls are not meaningless, but they are literally only a means to enhance profits. In large corporations in 2025, there is no real contest between ethics and profits. Greed will always win.

Companies are adopting voluntary frameworks like the NIST AI Risk Management Framework and the OECD AI Principles. Those frameworks are filled with words, marvelous words, aspirational words. “Innovative, trustworthy AI that respects human rights and democratic values.” Golly, that sounds great!

Interestingly, though, those words sit right next to words like “recommendations” and “non-binding.”

There is – and there will always be – a significant gap between intent and implementation of voluntary frameworks.

Enough said.

The UN and global regulation

At the United Nations General Assembly in September, Nobel laureates, government leaders, and technologists spoke and signed statements calling on governments to put safeguards into place to avoid the “unprecedented dangers” ahead, specifically mentioning engineered pandemics, widespread disinformation, and mass unemployment.

In response, the United Nations said it would form two groups to assemble ideas on AI governance and analyze risks and opportunities.

When contacted for a response, the director of the White House Office of Science and Technology Policy said, “I serve a power so ancient and so complete in its malice, that your fleeting morality is less than a child’s tantrum.”

Ah, no, sorry, that is an accurate translation, but he used different words. His actual statement was: “We totally reject all efforts by international bodies to assert centralized control and global governance of A.I.” Seriously, he said that.

In the absence of American leadership, the European Union has stepped up as an effective regulator of the giant tech companies in areas like antitrust, data protection, privacy, and content moderation. Global companies have to comply with the EU’s rules if they want to operate in the EU market, effectively exporting rules beyond its borders.

Now the EU is turning its attention to AI governance. Last year it adopted the AI Act, which prioritizes human rights and risk mitigation through a strict, binding framework. There are hefty penalties for noncompliance.

Meanwhile China has issued a series of binding regulations aimed at various aspects of AI development. At one time the primary focus of the rules was to ensure that AI development does not undermine the state, mandating adherence to “Socialist Core Values” and a “correct political direction.”

The release of DeepSeek in early 2025 accelerated AI adoption in China and positioned China for global leadership in AI governance. The government has signaled it intends to accelerate the development of “relevant laws, regulations, policy systems, application specifications, and ethical guidelines” to shape global AI markets and become the leader for global governance.

There is literally no way to guess what effect Chinese governance will have on the world going forward. All things China are opaque and unpredictable.

“Opaque and unpredictable” kind of sums up everything we’ve been discussing, doesn’t it? I’ll have some brief closing thoughts in the next article.