Google announced a number of new products and services at its developer conference last week. One of them, Google Lens, might change the world. It might be the most important technology announcement of the last ten years. Of course, it’s just as likely that it will turn out to be nothing important, just another bit of vaporware that is underwhelming when it appears and is abandoned two years later. And yet there’s something about this one that rings true.

We live in a world where, in just a few years, a single app, WeChat, became a preoccupation of a billion Chinese people and is starting to play an outsized role in the country’s economy. Google Lens is Google’s most obvious attempt yet to reach that kind of position in the rest of the world: to become so valuable that we stay in its orbit more and more naturally.

A bit of background will help. I have to lay out Google’s master plan for you to understand why Google Lens has such rich potential.

Google and artificial intelligence

Google dominates online advertising and gets about 90% of its revenue from ads. That’s a relatively mature business. Google will cultivate it for decades, innovating where it can to follow the trends. But the next generation of technology will be increasingly difficult to monetize with ads, and Google has been preparing for a long time to broaden its scope.

Google has given us such rich services that we are giving it more data about ourselves than we give to any other company. Facebook knows a lot about our friends and our interests, Amazon knows what we buy – but Google knows everything. Google Photos is the masterstroke in the plan to acquire more data about us than any other company can hope for. Google Photos is so good that it is quickly becoming the standard way to handle photos around the world. It has reached five hundred million monthly active users in just two years, and improvements are coming that will make it even better. We are uploading 1.2 billion photos and videos to Google every day. Google is analyzing everything about our photos – dates, times, places, the people in the photos, the clothes you’re wearing, the car in the background, the furniture, where you hang out, how much jewelry you wear, where your kids go to school, everything. That’s on top of what it knows from handling your web searches, indexing your mail, tracking your web browsing, storing your files, and oh so much more.

At the developer conference, Google emphasized its work on artificial intelligence and said that it intends to build AI into all of its products. It will constantly be giving you better feedback and more useful information. Search results will be tailored to what you prefer to find. Google Maps will be more accurate because Street View is learning more from examining the surroundings and reading signs. All of Google’s services will be closely linked to make them work better for you.

Artificial intelligence relies in part on vast amounts of data. The more data you can feed to smart algorithms, the smarter they will become. No other company has a chance of getting the amount of data about us that Google is soaking up every day.

Google Assistant

Google hopes those efforts will make you more dependent on Google Assistant. At first glance, Google Assistant seems similar to an ordinary Google search: ask for something, and Google Assistant answers. But Google hopes you’ll quickly find Google Assistant to be more helpful. Google Search results are generally limited to the information on public websites. Google Assistant also looks at your personal data, giving you answers that take into account your appointments, your preferences, your travel plans . . . your life.

Google Assistant is intended to become the primary method for interacting with Google. You mostly use it by talking to it. When you long-press the home button on some Android phones today (and more to come), Google Assistant will answer. As of last week, Google Assistant can be installed on iPhones. It can’t take over the home button from Siri (Apple won’t allow that), which is a big disadvantage, but you can use it like any other app. If you become familiar with it, you might start to use it instead of the iPhone apps for Google or Chrome.

Google Assistant is the voice that answers if you buy a Google Home device, Google’s answer to the Amazon Echo. Amazon has quite a lead with the Echo products, but Google Home is catching up and might already be a better choice. In the meantime, there is already little room left for Apple in that brand-new market, and Microsoft doesn’t stand a chance. As just one example of what Google Home is gaining, it will soon be able to make phone calls to any phone in the US for free, with your number integrated so the person you call sees your name and number in caller ID.

That’s just the beginning, though. Google is also moving aggressively to make Google Assistant available in cars. It will be built into new TVs. At the least, new TVs will have Chromecast built in, which Google Assistant will be able to control from your phone. (“OK Google, play Game of Thrones in the living room.” The TV comes on with the episode where you left off.) Google Assistant will be built in or will connect to new smart appliances – voice commands to turn on the oven or check on laundry. There’s more.

You can read more about Google Assistant here. It’s available now for iPhones and some Android phones. (It’s a bit hard to find in the App Store for some reason, but it’s there.) It will be made available for most recent Android phones in the next few months.

Google Lens

Google Lens might tip our world over so that we start using Google Assistant ten times a day, every day.

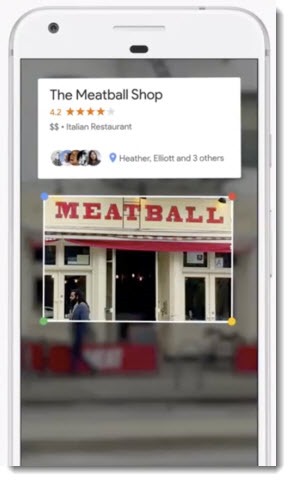

Google is leveraging its research in artificial intelligence and neural nets to be able to identify objects in photos with uncanny accuracy, and then give you information that is useful to you. Google Lens will be built into Google Photos. It will examine your photos and do interesting things. If you take a picture of a flower, Google Lens will tell you what species it is, and perhaps give you a link to a nearby nursery where you can buy it. If you take a picture of a restaurant, Google Lens will look up reviews and let you click to make a reservation. If you take a picture of a sign in another language, Google Lens will translate it.

That’s amazing. But it’s not the breathtaking part.

Google Lens will be built into Google Assistant. When you tap Google Lens, the phone camera will turn on and Google Lens will do all the same tasks in real time, overlaying useful information on what you see in front of you.

If you’re walking by a theater, you can point Google Lens at the movie poster and immediately see reviews, show times, and a link to buy tickets. It will add the showing to your calendar and note the address so you can pull it up on Google Maps later.

If you point at a restaurant, you’ll get links to menus, perhaps some discount coupons, a link to make reservations, and reviews posted by your friends.

If you point at the sticker on a router, your phone will read the wifi name and password and automatically join the network – no typing in long security codes.

Google didn’t mention it at the presentation, but one thing jumped into my mind after reading about WeChat, which relies heavily on QR codes: Google Lens will also be able to read QR codes. Maybe this is how QR codes will finally get off the ground in the US. Maybe we’ll find out that the easiest way to transfer contact information is to point Google Lens at the QR code on someone else’s phone.

This is a natural next step into augmented reality. No headset or glasses are needed to take this step. Those may come later. For now, we’ll hold our phones up and see the world in front of our eyes but with additional information that we can use. Remember Pokemon Go? It showed you the world with a little cartoon character on top. Google Lens will show you the world with useful data on top.

Google’s chances of pulling this off are better than any other company’s. The combination of industry-leading research into artificial intelligence, more data about more people than any other company can obtain, and two billion Android devices gives it a head start that it is working hard to extend.

You can read more about Google Lens here. It will become available in the next few months – no firm timetable yet.

What about money?

We haven’t talked yet about money.

There are obvious sources of revenue in all these plans. Stores will pay Google to have coupons offered when you point Google Lens at a storefront, for example.

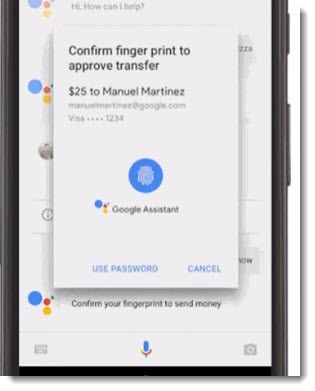

But I think Google has even bigger plans. Google hopes to follow WeChat’s example and integrate easy payment and money transfers into Google Assistant. It will build on Android Pay and Google Wallet so you can buy things online and offline with the credit card stored at Google. You’ll be able to say “Send $50 to Nate Andrews” and Google Assistant will confirm, get your fingerprint, and transfer the money with no fuss. At its conference, Google ordered delivery from Panera and paid for it with a few words to Google Assistant.

Here’s an article about sending money with Google Assistant.

That skyrockets Google into an entirely new realm. If we start using Google Assistant regularly, if Google Lens is as good as we hope and it becomes second nature to point our phone at something to get information about it, if Google builds in payments and money transfers that are fast and secure . . . if, if, if, it’s all a bunch of ifs.

The whole thing is audacious. It’s science fiction. It’s exhilarating and scary.

Unlike other pie-in-the-sky plans, though, it’s also plausible. It builds on Google’s traditional strengths in search, takes advantage of its vast data collection efforts and research in artificial intelligence, and has a clear business plan to monetize it all. There is nothing else going on in technology now to equal Google’s vision.

Other companies are working to get our attention. We may never have one app that monopolizes our attention the way WeChat does in China. But Google is doing everything right today and . . . well, the introduction of Google Lens might be as world-changing as the announcement of the iPhone was ten years ago.
