Two weeks ago Apple announced that iPhones would begin scanning for photos involving sexual abuse and exploitation of children.

Sounds like a good thing that makes the world a better place, doesn’t it? I’m sure Apple execs expected a burst of goodwill.

Instead it looks like an unforced error that has set back the careful work done by Apple for the last decade to promote itself as a privacy-focused company. Within a few days, an international coalition of more than ninety policy and civil rights groups published an open letter asking Apple to “abandon its recently announced plans to build surveillance capabilities into iPhones, iPads and other Apple products.” The groups include the American Civil Liberties Union, the Electronic Frontier Foundation, Access Now, Privacy International, and the Tor Project.

There are a lot of things to unpack. Mostly I want to help you understand what Apple announced and how the technology works. Much of the coverage and criticism misses an important distinction: Apple actually announced two completely different things, with different reasons to be troubled about what each might lead to in the future.

And then I’ll speculate – and that’s all it is for everyone, just guesswork – about why Apple decided to do something that undermines the privacy pledges they’ve staked the company’s reputation on for years.

What Apple said it was going to do

Apple announced that two new features will be installed on all iPhones with an upcoming iOS update.

One: Most iPhone users back up their photos with iCloud. When the first new feature is rolled out, each photo will be scanned on the phone just before it is uploaded to iCloud. If the photo matches an image in a database of known child sexual abuse material (details below) and some other threshold requirements are met, then the user’s Apple account will be disabled and the National Center for Missing & Exploited Children will be notified.

There are many safeguards. Nothing is triggered if images are only stored on the phone; there is no scan until an iCloud backup is scheduled. The system only reacts to a “collection” of thirty photos or more, not individual photos. If the thresholds are met, there will be a human review of low resolution versions of the images by Apple employees to confirm that the images match the database photos.
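The counting rule is simple enough to sketch in a few lines of Python. This is only an illustration of the "thirty or more" threshold described above. The function name and the list of true/false flags are made up for the example, and Apple's actual mechanism is more elaborate than a simple counter.

```python
# Threshold taken from the description above: "thirty photos or more".
MATCH_THRESHOLD = 30

def needs_human_review(match_flags, threshold=MATCH_THRESHOLD):
    """match_flags holds one boolean per photo queued for iCloud upload
    (True means the photo matched the database). Hypothetical helper."""
    return sum(match_flags) >= threshold

# 29 matches among 529 photos: nothing happens.
print(needs_human_review([True] * 29 + [False] * 500))  # False
# 30 matches: the account goes to human review.
print(needs_human_review([True] * 30 + [False] * 500))  # True
```

A single match, or even a couple dozen, does nothing under this rule. Only the collection as a whole can trip it.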

Two: Parents will have the option to turn on an iMessage feature on phones used by children (i.e., signed in with a child account). The phone will then scan all iMessage images sent or received by the child. If the image contains any sexually explicit material, the child will have the option to block the image. If the child opts to view the image, parents will be notified.

Technically this bears zero relationship to the database-matching scan. It’s gotten little attention but it has much higher potential for abuse in the future. We’ll talk about that in the next article.

A really, really short explanation of how the child porn database works

A little background will help you understand the reaction to the first proposal to scan for known Child Sexual Abuse Material. (“CSAM” is the current acronym of choice. I’ll refer to it as “child porn” because it’s easier to read, not to be flippant or disrespectful.)

The International Centre For Missing & Exploited Children (ICMEC) maintains a database of millions of child porn images and videos. No dispute, no ambiguity: these are awful, illegal pictures gathered up over the last ten or fifteen years.

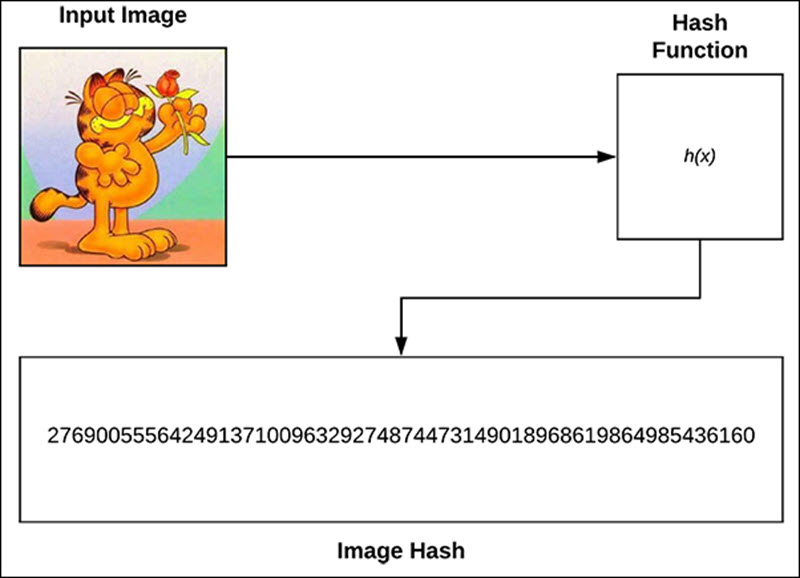

The ICMEC turns each yucky picture into a unique digital string of numbers and letters. Algorithms examine each pixel for intensity, gradients, edges, and relationship to the pixels next to it. Then Magical Math happens and poof! the picture as a whole is reduced to that single string.

The result is a relatively compact database that doesn’t have any pictures in it, just millions of unique sequences of numbers and letters. The ICMEC distributes the database along with software to make it easier for law enforcement to search computers run by likely offenders storing or trading any of the illegal images. The underlying technology was developed by Microsoft. Apple will be using its own custom program but it’s based on the same underlying concepts.

I’m going to use the word “string” a lot. It’s a math thing. Also referred to as a “digital hash” of the picture. When a picture is converted to a string, it looks something like this:

8fca969b64f34edc160a205cb3aa5c86

Each one is unique! You can’t tell anything about the picture from the string, and you can’t turn the string back into the picture, but that picture and only that picture will produce that code. With an ordinary hash program, altering even one pixel would give you a completely different code.

Digital hash programs can turn any picture into a unique string of numbers and letters. Run it against your photos and you’d have a bunch of lines of digital gibberish, one for each picture.
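If you want to see the idea in action, here's a minimal sketch in Python. It uses SHA-256, a general-purpose hash from Python's standard library, as a stand-in. That is an assumption for illustration only, not the program used by the ICMEC or Apple, and it produces a longer string than the example above. The "photos" are just made-up runs of bytes.

```python
import hashlib

def photo_to_string(photo_bytes):
    """Reduce a photo's raw bytes to a single hex string (SHA-256 stand-in)."""
    return hashlib.sha256(photo_bytes).hexdigest()

# Stand-in "photos". Real code would read the bytes of an image file.
original = bytes([10, 20, 30, 40] * 1000)
same_again = bytes([10, 20, 30, 40] * 1000)
one_byte_changed = bytes([11]) + original[1:]

print(photo_to_string(original))  # one line of digital gibberish

# Same picture in, same string out.
print(photo_to_string(original) == photo_to_string(same_again))        # True
# Change a single byte and the string no longer matches.
print(photo_to_string(original) == photo_to_string(one_byte_changed))  # False
```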

The ICMEC program can compare each string from your photos to the millions of strings in the child porn database. If anything matches exactly, it’s the same picture, Q.E.D., ipso facto, res ipsa loquitur, j’accuse.
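Exact matching is the easy part. Here's a rough sketch in Python, again using SHA-256 from the standard library as a stand-in hash. The database contents, file names, and function names are all hypothetical. The point is only that the comparison is string against string, with no pictures in the database at all.

```python
import hashlib

def photo_to_string(photo_bytes):
    """Stand-in hash: SHA-256 of the photo's raw bytes, as a hex string."""
    return hashlib.sha256(photo_bytes).hexdigest()

# Hypothetical database: strings only, no pictures.
known_bad_strings = {
    photo_to_string(b"known-bad-picture-1"),
    photo_to_string(b"known-bad-picture-2"),
}

def find_exact_matches(photos, database):
    """Return the names of photos whose string appears in the database."""
    return [name for name, data in photos.items()
            if photo_to_string(data) in database]

# Made-up photo library: file name -> raw bytes.
my_photos = {
    "beach.jpg": b"sunny-day",
    "cat.jpg": b"fluffy",
    "copied.jpg": b"known-bad-picture-2",
}

print(find_exact_matches(my_photos, known_bad_strings))  # ['copied.jpg']
```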

But there’s one more bit of Magical Math that we have to take on faith. Apparently it works and it’s very reliable.

The ICMEC program can also do math on those unique hashes to tell if a picture likely started out as one of those child porn pictures – close but not identical. If you (or someone) tried to disguise an illegal photo in the database by cropping it or converting it to black & white or doing a bit of Photoshop work, the ICMEC program can still tell that it matches a photo in the database, just by doing Magical Math.
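To take a little of the magic out of it, here's a toy version of close-but-not-identical matching in Python. It uses "average hash," a simple textbook technique, which is an assumption chosen for illustration and not the Microsoft or Apple algorithm. The 4x4 grids of brightness values and the allowed distance of 2 are invented for the example, and this toy would not survive cropping the way the real programs are said to.

```python
def average_hash(pixels):
    """pixels is a grid (list of rows) of grayscale values, 0-255.
    Each pixel becomes 1 if brighter than the picture's average, else 0."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]

def hamming_distance(bits_a, bits_b):
    """Count the positions where two bit lists disagree."""
    return sum(a != b for a, b in zip(bits_a, bits_b))

def looks_like(picture_a, picture_b, max_distance=2):
    """Hypothetical rule: call it a match if few enough bits differ."""
    distance = hamming_distance(average_hash(picture_a), average_hash(picture_b))
    return distance <= max_distance

original = [
    [200, 200, 50, 50],
    [200, 200, 50, 50],
    [50, 50, 200, 200],
    [50, 50, 200, 200],
]
# The same picture with every pixel brightened a little.
brightened = [[min(255, value + 20) for value in row] for row in original]
# A different picture entirely (vertical stripes).
unrelated = [[50, 200, 50, 200] for _ in range(4)]

print(looks_like(original, brightened))  # True
print(looks_like(original, unrelated))   # False
```

Brightening changes every pixel value, but the pattern of brighter-than-average and darker-than-average pixels stays the same, so the fingerprints still match.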

To be clear: it’s not looking at the pictures. It’s not counting limbs or looking for bodies in a particular position. It’s doing math on the string. The hash. The digital fingerprint. (There are lots of names for the math bits.) If you created a tableau with different people to precisely duplicate one of those illegal images, with everyone in exactly the same position, (1) you are an awful person – seriously, you’re a monster, and (2) the image would not be flagged by the algorithm.

The result is that no photo that you have taken would ever be flagged by the ICMEC program. It is exclusively looking for matches with images in the big database of known badness. But it’s really good at sniffing out those photos, even if someone has tried to fool the algorithms by tweaking the photos.

The ICMEC database and magical-math-string-matching program are routinely used to scan everyone’s photos – your photos! – in OneDrive, Twitter, Facebook, Reddit, and other services. Apple, Google, and Microsoft scan all photos sent by email. The same technology has been used extensively in the last ten years by law enforcement around the world.

Five years ago Facebook, Twitter, Google, and Microsoft started using similar technology to identify extremist content such as terrorist recruitment videos or violent terrorist imagery. It was used to remove al-Qaeda videos. We’ll come back to that when we talk about the reaction to Apple’s announcement.

In the next article, let’s see if we can figure out why privacy and civil liberties groups are freaked out by Apple’s announcement, and think about what might have caused Apple to walk into this controversy.